author of Honorable Influence - founder of Mindful Marketing

As a marketing professor, I probably pay more attention to advertising than most people do, which sometimes leads to seeing similarities among ads and noticing interesting trends. I recently saw several video spots, all from different advertisers, each lampooning the ways specific nationalities/ethnicities communicate:

It’s important to note that each portrayal is intended to be funny, which is certainly common for national ads – think Super Bowl commercials. But like beauty, humor is in the eyes and ears of the beholder, so when do commercials move from silly/stupid, to annoying/irritating, to distasteful/objectionable, to repugnant/offensive? Or, more specifically, when does mocking people’s accents become unethical?

Like communication in general, humor is highly contextual, which is why there are inside jokes that only people aware of a specific backstory understand. Mocking accents can be acceptable and even desirable in certain contexts of social intimacy. For instance, two friends – one from New York City and the other from Boston – might playfully tease each other about their different food preferences, favorite sports teams, and distinct ways of speaking.

With advertising, backstories aren’t shared between just a few people; rather, they draw on common knowledge and shared experiences among people regionally, nationally, or even globally, some of which are positive and others negative. Advertisers should be especially sensitive to the latter.

At some point in their lives, most people probably have had someone comment on the way they talk, perhaps in a complimentary way or maybe critically. However, some people endure daily comments about their accents that often turn into ridicule and even racism. Unfortunately, it’s not hard to find examples of such verbal abuse online, like the following:

- Terry Nguyen is an effective writer, but she thinks twice before speaking because she sometimes mispronounces words, a result of growing up in a home with two Vietnamese parents who spoke rough English.

- Sharada Vishwanath tells the story of a classmate imitating her Indian accent, an imitation that began as lighthearted fun but quickly turned annoying and offensive when the agitator worked in the words “curry” and “cheaper.”

Belittling people because of the way they talk can be “linguistic racism,” which in work environments may cause those targeted to refrain from speaking and to miss opportunities for professional advancement.

So, do the commercials mentioned at the onset represent linguistic racism? Possibly. An important distinction is whether depictions are of race vs. ethnicity vs. nationality. Another issue is that not all accent imitation is the same – as mentioned earlier, the interpretation of any communication is partly a function of the backstory, or broader context.

Historically, in many English-speaking Western nations, people of color from places like Asia, Africa, and Latin America have been the recipients of far more accent abuse than Europeans whose first language is not English. For Terry and Sharada, mentioned above, criticism of their speaking is not a one-off experience but a regular occurrence.

Over the past century, television shows and movies have cast Asian, Black, Latino, and Native American actors and often had them speak broken and improper English, which has contributed to shameful stereotypes of people of color being less intelligent and more socially inept.

One common trope is that of an Asian person who adds “ee” to the ends of words (e.g., “talkee”), drops small words such as “the,” “this,” and “that,” and replaces L’s with R’s (e.g., “herro” instead of “hello”). In one of its final scenes, the classic Christmas movie A Christmas Story employs this Asian stereotype.

Indians are also frequently stereotyped for a particular style of English speaking. Such accent mocking is what led The Office’s Kelly Kapoor in Season 1 to slap her bigoted boss Michael Scott. For decades, the animated TV show The Simpsons lampooned Indians through its recurring character Apu Nahasapeemapetilon, until Hank Azaria, who voiced him, stepped away from the role in 2020. Imitation of Indian accents is so common that there is a term for it: brown voice.

Ridicule is bad enough, but “At worst, linguistic racism can lead to deprivation in education, employment, health and housing,” as benefits and opportunities are sometimes withheld from those who talk differently. Perpetuating negative perceptions easily leads to social stigmas that carry significant physical and economic consequences.

By contrast, the accents of French, Dutch, German, and other European non-native English speakers are not only mocked much less often; when their accents do draw comment, it usually takes on a different tenor. Their speech is more often complimented as sounding cute, sexy, or sophisticated, whereas that of Asians and Indians tends to be criticized for grammatical errors and pronunciation mistakes.

So, does this asymmetry in experience make it acceptable to mock Europeans’ accents? I’d like to offer three reasons why it does not:

1. People are still hurt. Isabelle Duff, a native of Ireland whose job took her to London, recounts how she often felt harassed by coworkers who continually imitated her Irish accent. Scottish actor Billy Boyd, who played “Pippin” in Lord of the Rings, refuses roles, common in scripts, that call for an incomprehensible Scottish accent, an unfair and demeaning stereotype of Scots. A similar example is the unintelligible babble of Sesame Street’s Swedish Chef, whom many Swedes don’t find funny.

2. Wrong for one should mean wrong for all. There aren’t many examples in ethics where compelling cases can be made that it’s okay to harm certain groups of people, but not others. A proponent of capital punishment might argue it’s right to execute murderers but not others; however, murderers aren’t a distinct, demographically identifiable people group. Also, unlike Scots, Irish, and Swedes, murderers have done things that arguably warrant differential treatment.

3. Don’t imply permission. People expect consistency. If a parent tells one child they can stay up late, their sibling will expect the same privilege. So, if it’s acceptable to mock Scots, some people will deduce that it’s okay to mock Indians too. The safest approach is to not offer any basis for making that inference by maintaining that it’s inappropriate to mock the speech of any people group.

When we open our mouths to speak, funny things sometimes come out. It’s okay to laugh about those silly sounds and statements with people we know, in the right context, and with pure intent.

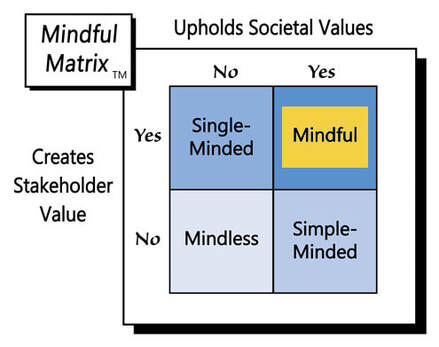

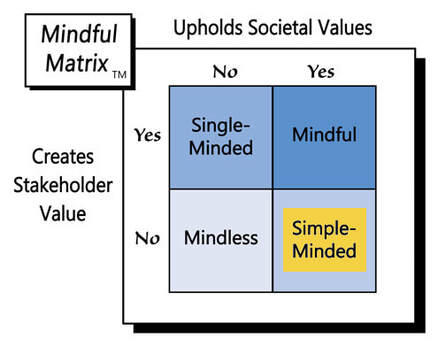

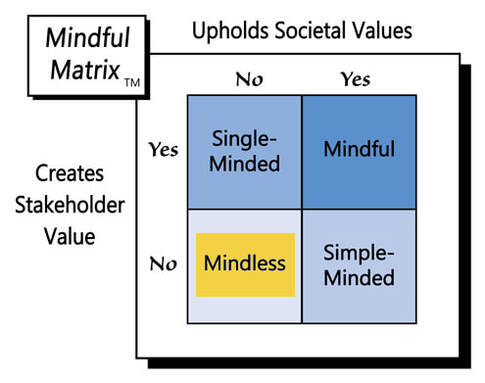

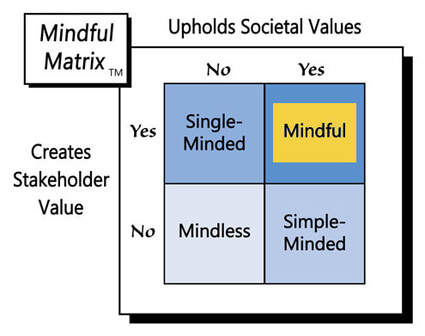

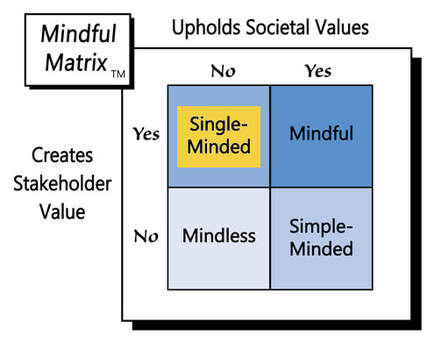

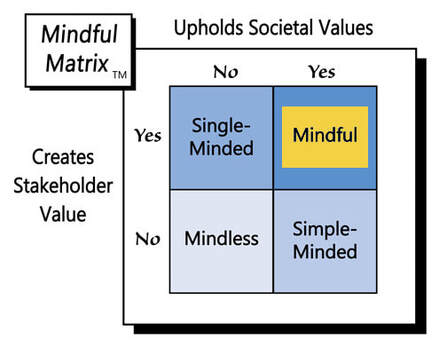

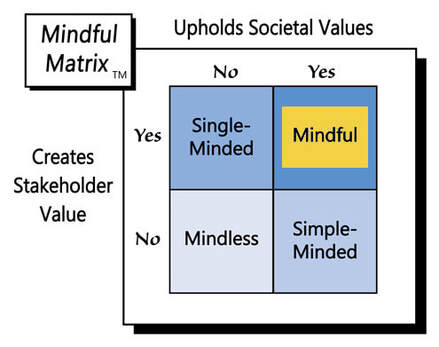

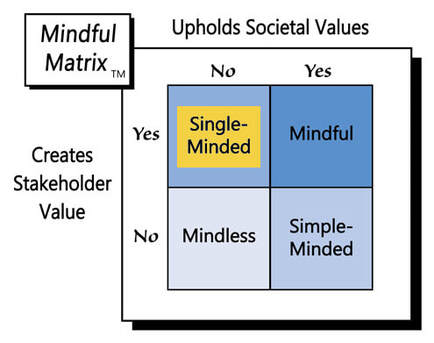

However, the standards that fit individual incidents cannot be morally stretched to cover broad cases involving the accents of entire people groups. Although it may seem funny and be effective, advertising that mocks the speech of any race, ethnicity, or other demographic should be considered “Single-Minded Marketing.”

Learn more about the Mindful Matrix.
