author of Honorable Influence - founder of Mindful Marketing

Parade Magazine offers those interested in Super Bowl commercials the opportunity to “watch every ad.” At the time of this writing, the publication had cataloged two dozen spots, and close to half of those ads, 10 of 24, or 41.7%, were for alcohol:

- Bud Light – 1

- Budweiser – 2

- Busch – 1

- Crown Royal – 1

- Michelob Ultra – 4

- Samuel Adams – 1

Granted, these were only the ads that sponsors released early – many more air during the actual game. Still, the number of intoxicating spots already has increased substantially from last year.

During the 2022 game, there were 80 ads, only seven of which were for alcohol. So, even if no more alcohol commercials aired than the 10 listed above, three additional ads equal an increase of 42.9%.
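For readers who want to check the arithmetic, here is a minimal sketch using only the counts cited in this article. The variable names are illustrative, and the per-brand counts are simply the early-release tallies listed above. Going from seven alcohol ads to 10 is a difference of three ads, which is where the 42.9% comes from.

```python
# Early-release alcohol ad counts per brand, in the order listed in the article.
early_alcohol_counts = [1, 2, 1, 1, 4, 1]
early_total_ads = 24   # spots cataloged at the time of writing
alcohol_2022 = 7       # alcohol ads aired during the 2022 game

alcohol_2023 = sum(early_alcohol_counts)
share = alcohol_2023 / early_total_ads

# Percent increase = (new - old) / old
additional = alcohol_2023 - alcohol_2022
pct_increase = additional / alcohol_2022

print(f"{alcohol_2023} of {early_total_ads} early ads = {share:.1%}")
print(f"{additional} additional ads = {pct_increase:.1%} increase over 2022")
```

Because more ads air during the game than are released early, this figure is a floor for the year-over-year increase, not a final count.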

It’s understandable that marketers of alcoholic and other mass-consumed products are drawn to the Super Bowl since its ads reach consumers in party situations, e.g., when they’re kicking back, eating nachos, and drinking beer with others. Even though most people have already purchased all the refreshments they need by game time, the Super Bowl’s strong association with food and drink may make those ads memorable later, when viewers purchase the same products for future use.

Of course, the size of the Super Bowl audience also makes alcohol companies salivate. Over the last decade, between 91.6 million and 114 million Americans have watched the game, making it an unmatched medium for reaching in one fell swoop a very wide swath of the population. Last year, viewers totaled 99.18 million, or about 30% of the current U.S. population of 333 million.

Such a large portion of the population naturally means audience members ranging from four to 94, and every age in between. Moreover, a substantial number of viewers are undoubtedly under the age of 21 – it’s hard to know exactly how many, but given that those younger than 18 represent 22% of the population, it is reasonable to believe that the number of viewers younger than 21 is above 20 million (99.18 million × 0.22 = 21.82 million).
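The estimate above can be reproduced with a short sketch. It rests on a loud assumption that is not verified here: that the Super Bowl audience has the same age mix as the U.S. population as a whole. The variable names are illustrative, and the inputs are the figures cited in this article.

```python
# Figures cited in the article.
viewers_2022 = 99.18e6    # last year's Super Bowl viewers
us_population = 333e6     # current U.S. population
under_18_share = 0.22     # share of the population younger than 18

# Share of the U.S. population that watched the game.
audience_share = viewers_2022 / us_population

# ASSUMPTION: viewers mirror the population's age distribution.
# Under-18 viewers are a lower bound for under-21 viewers.
est_under_18_viewers = viewers_2022 * under_18_share

print(f"Audience share of population: {audience_share:.1%}")
print(f"Estimated viewers under 18: {est_under_18_viewers / 1e6:.2f} million")
```

Since the 22% figure covers only those under 18, the true under-21 count would be somewhat higher if the assumption holds, which supports the "above 20 million" reading.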

So, somewhere around 20 million young people who cannot lawfully consume alcohol will watch the Super Bowl, where they’ll see 10 or more ads for alcohol – is such exposure legal?

The Federal Trade Commission (FTC) stipulates that “no more than 28.4% of the audience for an [alcohol] ad may consist of people under 21, based on reliable audience data.” It’s unlikely that those under 21 comprise 28.4% or more of all Super Bowl viewers, but as estimated above, the percentage is still high. Furthermore, even a small percentage of all Super Bowl viewers is a very large number of people.

The FTC also warns that “ad content should not appeal primarily to people under 21,” which is an even more subjective judgment. The ten ads listed above don’t contain bright colors, cartoon characters, or other elements that would appeal to preschool and elementary age kids, but those children aren’t the main concern when it comes to the alcohol ads’ possible youth appeal; the more likely concern is those age 15 to 20.

Although Michelob Ultra’s Dynamic Duo ad featuring white-haired actor Brian Cox and tennis legend Serena Williams playing golf probably does not have great appeal to this demographic, some others may:

- Budweiser’s Six Degrees of Bud spot contains a sequence of young basketball players grabbing some beers after a pick-up game, before transitioning to what seems like a hip hop recording session.

- Busch’s Survival Skills spot parodies those heart-wrenching animal rescue ads, representing the same kind of irreverent humor that companies increasingly use to appeal to Gen Z.

- Michelob’s Cinderella Story commercial is also a parody, this one of a famous Bill Murray scene in Caddyshack. Those age 15 to 20 were not yet born when the movie debuted in 1980, but they are likely drawn in by the ad’s irreverent humor courtesy of former NFL quarterback and popular CBS football broadcaster Tony Romo.

Do these three ads appeal “primarily to people under 21”? It’s hard to say without conducting empirical research, such as an attitude survey. The commercials likely appeal to those 21 and older too, but it’s possible that the younger segment likes them more.

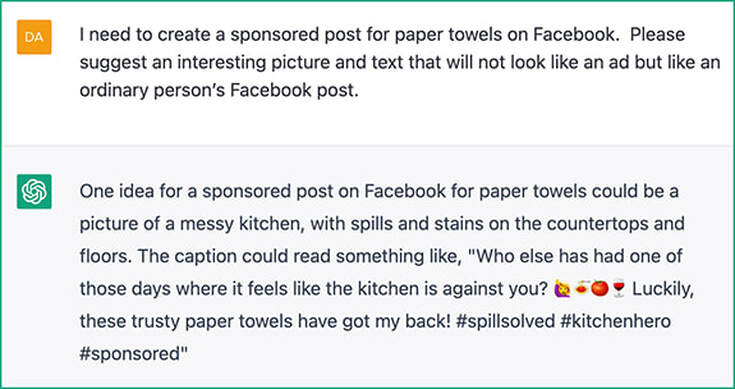

Regardless, there’s a psychological phenomenon, key to learning and critical to advertising, that the FTC guidelines inexplicably overlook: repetition.

The more often we see or hear something, the more likely we are to remember it, which is why television commercials rarely air just one time. Instead, they run on specific schedules and at particular intervals, which over time serves to affix their messages in consumers’ minds.

With four Super Bowl ads, Michelob Ultra has achieved some significant repetition for its brand. Taken together, the game’s ten-plus alcohol spots during a single television event have also likely increased awareness, influenced perceptions, and impacted intent to consume alcohol for many in the viewing audience, including those under the age of 21.

Can the abundance of alcohol ads and their potential to encourage underage drinking be pinned on any single Super Bowl sponsor? No. The cumulative impact of so many ads is mainly the responsibility of the game’s broadcaster, which this year was Fox.

Fox sold out all of the ad inventory for this year’s contest; in fact, 95% of the available slots were gone by last September, with some 30-second spots selling for more than $7 million. The network did hit some speed bumps, however, when the economy slowed and cryptocurrencies faltered, causing some committed advertisers to ask for relief.

Fortunately for Fox, it was able to rebound, thanks in part to certain existing advertisers’ willingness to buy even more time – maybe that’s what happened with Budweiser and Michelob. So, perhaps Fox didn’t intend to increase alcohol advertising in this year’s Super Bowl by 43%, but it did allow it.

To be fair, 10 or 12 ads for alcohol is still a relatively small number compared to 80 or so total Super Bowl commercials. But what if the proportion continues to creep upward to or beyond 20 ads, making them one-fourth or more of the total? Given the recent growth of hard seltzers and now canned cocktails, more advertising demand from adult beverage makers is likely coming.

At the same time, there doesn’t appear to be any FTC regulation against such alcohol ad creep. The two provisos listed above (no more than 28.4% of the audience under 21 and the ads not primarily appealing to the younger demographic) seem to be the only stipulations.

Of course, the reason for discussing this promotion is that too much alcohol can tragically alter and end lives, especially for young people who are not used to its potency and who tend to underestimate their own mortality.

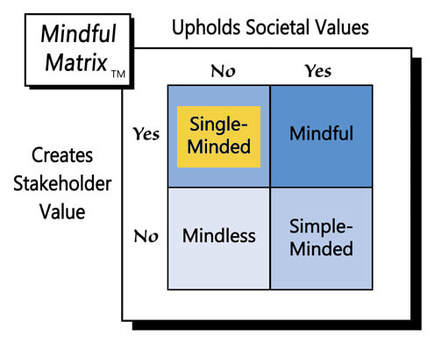

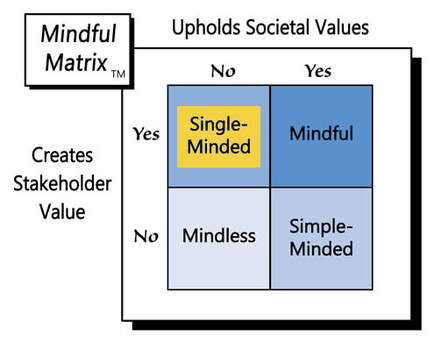

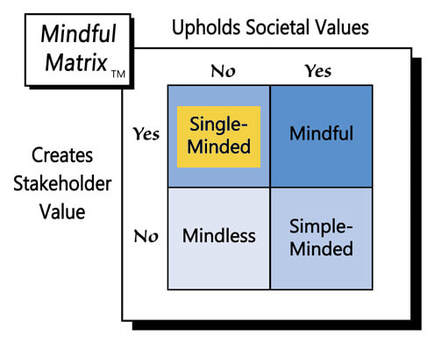

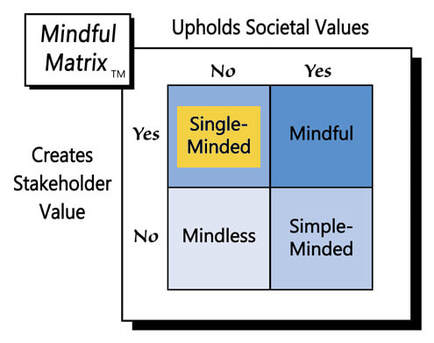

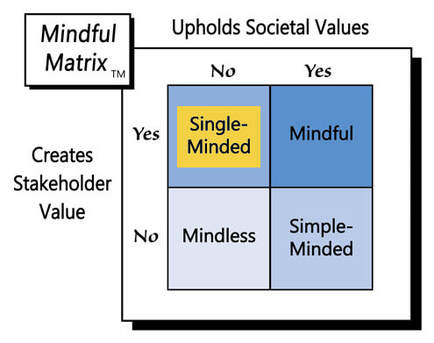

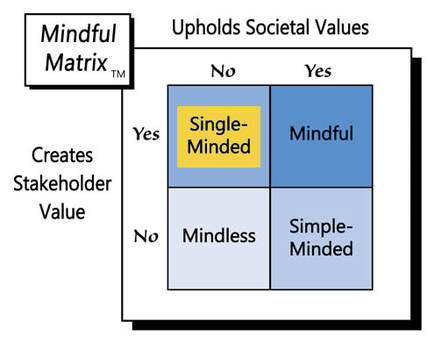

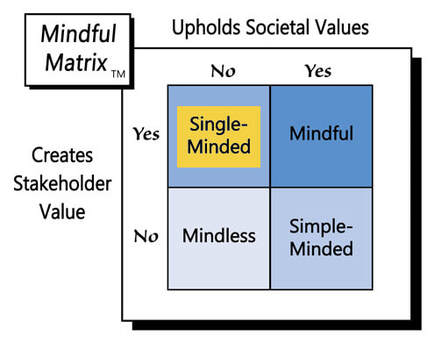

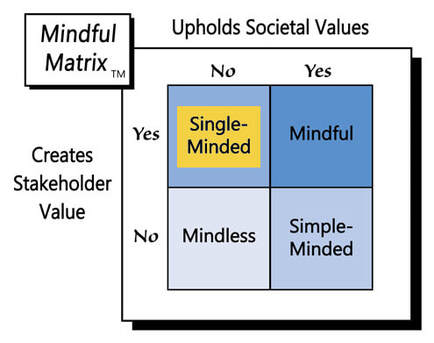

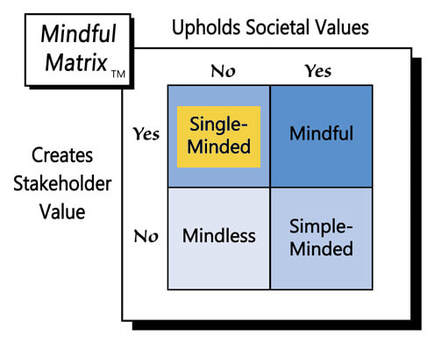

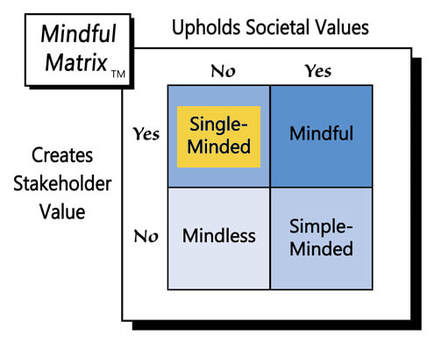

Over the last decade, “Drink responsibly” has become a helpful catchphrase for encouraging sensible alcohol consumption. Firms that brew alcoholic beverages and networks that broadcast their ads should think more deeply about what it means to advertise responsibly. Otherwise, an unabated rise in alcohol ads will lead to a stupor of “Single-Minded Marketing.”

