author of Honorable Influence - founder of Mindful Marketing

Nyla Anderson was a “happy child” and “smart as a whip”—she even spoke three languages. Tragically, the 10-year-old Pennsylvania girl’s life was cut short on December 12, when she died while attempting a perilous social media trend called the Blackout Challenge.

The Blackout Challenge “requires the participant to choke themselves until they pass out and wake up moments later.” Sadly, some who participate, like Nyla, never wake up, and if they don’t die, they may suffer seizures and/or brain damage.

It’s tragic, but young people likely have engaged in foolhardy, life-threatening behavior since the beginning of humankind. Within a few years of my high school graduation, two of my classmates lost their lives in separate car crashes caused by high-speed, reckless driving. Most people probably can share similar stories of people they knew who needlessly died too young.

In some ways it’s inevitable that young people’s propensity for risk-taking paired with a limited sense of their own mortality will lead them to endanger themselves and encourage others to do the same. What’s inexplicable is how older and presumably more rational adults can encourage and even monetize such behavior, which is what some suggest TikTok has done.

Unfortunately, Nyla is not the only young person to pass away while attempting the Blackout Challenge. Other lives the ill-advised trend has taken include 12-year-old Joshua Haileyesus of Colorado and 10-year-old Antonella Sicomero of Palermo, Italy. According to their families, TikTok provided the impetus for each of these children to attempt the challenge.

Most of us know from experience that peer influence can cause people to do unexpected and sometimes irrational things. In centuries gone by, that influence was limited to direct interpersonal contact and then to traditional mass media like television. Now, thanks to apps like TikTok, anyone with a smartphone holds potential peer pressure from people around the world in the palm of their hand.

In TikTok’s defense, the Blackout Challenge predates the social media platform. ByteDance released TikTok, or Douyin as it’s known in China, in September of 2016. Children had been attempting essentially the same asphyxiation games, like the Choking Challenge and the Pass-out Challenge, many years prior. In fact, the Centers for Disease Control and Prevention (CDC) reported that 82 children, aged 6 to 19, likely died from such games between 1995 and 2007.

It’s also worth noting that individuals and other organizations create the seemingly infinite array of videos that appear on the platform. ByteDance doesn’t make them; it just curates the clips according to each viewer’s tastes using one of the world’s most sophisticated and closely guarded algorithms.

So, if TikTok didn’t begin the Blackout Challenge and it hasn’t created any of the videos that encourage it, why should the app bear responsibility for the deaths of Nyla, Joshua, Antonella, or any other young person who has attempted the dangerous social media trend?

It’s reasonable to suggest that TikTok is culpable for the self-destructive behavior that happens on its premises. An analogy might be a property owner who makes his house available as a hangout for underage drinking. The homeowner certainly didn’t invent alcohol, and he may not be the one providing it, but if he knowingly enables the consumption, he could be legally responsible for “contributing to the delinquency of a minor.”

By hosting Blackout Challenge posts, TikTok could be contributing to the delinquency of minors.

I have to pause here to note an uncomfortable irony. Less than four months ago, just after Frances Haugen blew the whistle on her former employer Facebook, I wrote a piece titled “Two Lessons TikTok can Teach Facebook.” In the article, I described specific measures TikTok had taken to, of all things: 1) discourage bad behavior, and 2) support users’ mental health.

How could I have been so wrong? Although I certainly may have been misguided—it wouldn’t be the first time—TikTok’s actions that I cited truly were good things. So, maybe the social media giant deserves to defend itself against the new allegations.

TikTok declined CBS News’ request for an interview, but it did claim to block content connected to the Blackout Challenge, including hashtags and phrases. It also offered this statement: “TikTok has taken industry-first steps to protect teens and promote age-appropriate experiences, including strong default privacy settings for minors.”

The notion of protecting teens is certainly good; however, it’s hard to know what “industry-first steps” are. Furthermore, age-appropriateness and privacy are important priorities, but neither objective aligns particularly well with the need to avoid physical harm, which is the main problem of the Blackout Challenge.

In that spirit and in response to accusations surrounding Nyla’s death, TikTok offered to Newsweek a second set of statements:

“We do not allow content that encourages, promotes, or glorifies dangerous behavior that might lead to injury, and our teams work diligently to identify and remove content that violates our policies.”

"While we have not currently found evidence of content on our platform that might have encouraged such an incident off-platform, we will continue to monitor closely as part of our continuous commitment to keep our community safe. We will also assist the relevant authorities with their investigation as appropriate."

These corporate responses do align better with the risks the Blackout Challenge represents. However, there’s still a disconnect: TikTok claims it’s done nothing to facilitate the Blackout Challenge, but family members of those lost say the social media platform is exactly where their children encountered the fatal trend.

The three families’ tragedies are each unique, but they’re far from the only cases of people seeing the Blackout Challenge on TikTok and posting their own attempts on the app. TikTok has taken measures that have likely helped ‘lessen the destruction,’ but it’s unreasonable for it to claim exoneration.

The company’s app must be culpable to some degree, but what exactly could it have done to avoid death and injury? That question is very difficult for anyone outside TikTok or without significant industry expertise to answer; however, let me ask one semi-educated question: Couldn’t TikTok use an algorithm?

As I’ve described in an earlier blog post, “Too Attached to an App,” ByteDance has created one of the world’s most advanced artificial intelligence tools—one that with extreme acuity serves app users a highly-customized selection of videos that can keep viewers engaged indefinitely.

Why can’t TikTok employ the same algorithm, or a variation of it, to keep the Blackout Challenge and other destructive videos from ever seeing the light of day?

TikTok is adept at showing users exactly what they want to see, so why can’t it use the same advanced analytics with equal effectiveness to ‘black out’ content that no one should consume?

The truism ‘nobody’s perfect’ aptly suggests that every person is, in a manner of speaking, part sinner and part saint. TikTok and other organizations, which are collections of individuals, are no different, doing some things wrong and other things right but hopefully always striving for less of the former and more of the latter.

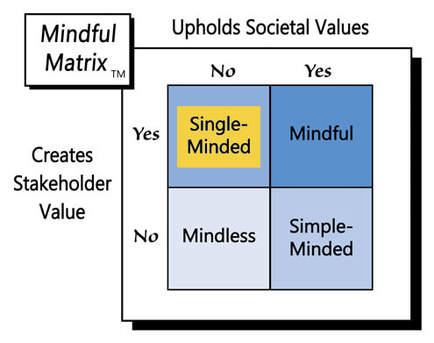

Based on its statements, TikTok likely has done some ‘right things’ that have helped buffer the Blackout Challenge. However, given the cutting-edge technology the company has at its disposal, it could be doing more to mitigate the devastating impact. For that reason, TikTok remains responsible for “Single-Minded Marketing.”
