author of Honorable Influence - founder of Mindful Marketing

It’s been hard to find news feeds recently that haven’t featured Facebook. The iconic social network, which has often been the focus of questions from citizens and senators, was back in the spotlight after a former Facebook employee-turned-whistleblower appeared on 60 Minutes and exposed a series of alleged corporate abuses, most impacting consumers.

Frances Haugen is a 37-year-old data scientist and Harvard MBA who has worked for a variety of top-tier social media firms for 15 years, including a two-year tenure at Facebook. In her October 3rd interview on 60 Minutes, she didn’t pull punches in portraying what she believes is her former employer’s danger to society. Among her accusations were:

- Facebook’s algorithms systematically amplify angry and divisive content, which is rewarded with more revenue, as other content doesn’t receive adequate returns.

- Facebook employees are compelled to curate polarizing posts in order to drive site traffic, maintain user engagement, and ultimately keep their jobs.

- “Facebook has set up a system of incentives that is pulling people apart.”

Two days later, Haugen testified before a Senate subcommittee, where she made several other stinging revelations:

- Facebook has ways of determining people’s ages and could be doing much more to identify users younger than 13.

- Hate speech and misinformation boost meaningful social interaction (MSI), a key Facebook metric to which employee bonuses are tied.

- Facebook’s “amplification algorithms” and “engagement-based ranking” drive young people to destructive online content, resulting in bullying, body image issues, and mental health crises.

Facebook has responded to Haugen’s accusations, including with a written statement to 60 Minutes in which it claims that polarization has decreased in countries where internet and Facebook use has risen. Also, in a Facebook post, CEO Mark Zuckerberg has suggested that Haugen’s revelations represent “a false picture of the company” and that the idea that the firm prioritizes profit above safety and well-being is “just not true.”

Unlike Haugen and Zuckerberg, most of us have no window into Facebook’s inner workings. At best, we’re just one of the 2.7 billion monthly active users of the world’s largest social media platform, meaning we have no way of knowing whose representations are really true.

Human nature and history tell us that both sides are likely right in some ways, and perhaps responsible for certain misrepresentations. That said, many people have experienced firsthand Facebook feeds strewn with angry and polarizing posts. Likewise, the company’s recent decision to pause its work on an Instagram product for children under age 13 seems to reflect some sense of mea culpa.

In short, it’s becoming ever more apparent, even to nominal social media users, that there are important issues Facebook needs to address more effectively. The question, then, becomes, “Who can teach Facebook how to rehabilitate its social impact?”

It must be hard for one of the largest and most influential companies in the world to accept advice from anyone, including members of Congress, as evidenced during Zuckerberg’s many visits to testify on Capitol Hill.

That doesn’t mean that government regulation isn’t effective. It plays a critical behavior-modifying role. However, there are natural delays in passing legislation, and those lag times are often exacerbated by the speed at which social media and related technology change. Furthermore, members of Congress typically don’t understand an industry as well as those who work in it, particularly when the industry involves high tech.

So, who also lives at the cutting edge of technology and could influence Facebook toward more positive social impact? One particular competitor could—TikTok.

I admit that, on the surface, this suggestion seems almost ridiculous: With its own algorithms driven by artificial intelligence, isn’t TikTok part of the same problem?

In fact, I’ve expressed my misgivings about the influence of the widely popular app that Search Engine Journal describes as having “the fastest growth of any social media platform.” In the end, however, I concluded that users’ abilities to restrict or stop using TikTok suggested that it was not truly addictive.

Of course, ‘not being part of the problem’ doesn’t necessarily mean that TikTok can be part of a Facebook solution. However, the social media upstart has recently taken two initiatives that align squarely with two of the main principles that Haugen suggested Facebook must learn:

1. To discourage bad behavior: Compared to the millions and millions of videos available on TikTok, it was admittedly a minor move when the app recently began to ban posts that referred to stealing school property—a disturbing late-summer trend among teens. Still, the moral stand that the company took shouldn’t be diminished. A TikTok spokesperson explained the ethos:

“We expect our community to stay safe and create responsibly, and we do not allow content that promotes or enables criminal activities.”

2. To support users’ mental health: Also about a month ago, TikTok unveiled “a slew of features intended to help users struggling with mental health issues and thoughts of suicide.” Among the app-related resources are well-being guides for those struggling with eating disorders and a search intervention feature that activates if a user enters a term like “suicide.”

Facebook’s challenges to more effectively discourage bad behavior and to support mental health may be somewhat unique, both in terms of their nature and magnitude. Still, TikTok now has 1 billion monthly users, up from 700 million just a year ago, and those users seem to deal with many of the same social concerns that Facebook users do.

Businesses routinely learn from others, often by observing and emulating them (e.g., developing new products). Facebook certainly can and likely does already do that, but maybe there’s another level of within-industry education that could occur.

This suggestion may be the most ridiculous one yet, but what if Facebook and TikTok cooperated? What if the two companies ‘compared notes’ and in some way worked together to address the physical, emotional, and social challenges that threaten both their users?

Of course, imagining any cooperation between such large and close competitors is practically unthinkable, but it’s not unprecedented. Three decades apart, both Harvard Business Review (1989) and Forbes (2019) published articles citing examples of such partnerships, like General Motors and Toyota, and explaining the win-win outcomes that accrued from such “coopetition.”

What might Facebook and TikTok’s motivations be for cooperating? Perhaps they both would like to avoid probable government regulation. Or, they may want to see how they can advance themselves, without compromising their competitive positions.

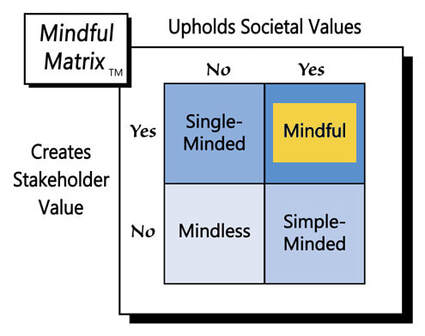

Moreover, maybe Facebook and TikTok can recognize that personal and societal well-being are what matter most, and that together they have the power to shape them like few others can. Actually, all three motivations have merit, and together they certainly represent “Mindful Marketing.”

Learn more about the Mindful Matrix.

Check out Mindful Marketing Ads and Vote your Mind!
