Amazon, the giant e-tailer and purveyor of just about everything, recently added a new piece of hardware to its line of artificial intelligence products: the Echo Look, which can tell people what to wear, or not wear. The company succinctly describes the device as a “hands-free camera and style assistant.” Amazon also offers a fuller account of features and benefits on its website:

“With Echo Look, you can take full-length photos of your daily look using just your voice. The built-in LED lighting and depth-sensing camera let you blur the background to make your outfits pop, giving you clean, shareable photos. Get a live view in the Echo Look app or ask Alexa to take a short video so you can see yourself from every angle. View recommendations based on your daily look and use Style Check for a second opinion on what looks best. And, because Alexa is built in the cloud, she’s always getting smarter—and so will Echo Look.”

Amazon already boasts a burgeoning family of Alexa-enabled Echo products ranging from Echo Dots, to the standard Echo, to a new Echo Show. For over two years, hands-free functionality and voice recognition technology connected to the cloud have allowed users to get answers to questions like “Is it going to rain?” and “What’s on my calendar?” So, what’s so special about asking about style?

The big difference is that most, if not all, of the questions Alexa accepts on other devices can be answered with objective information. Reporting the current outside temperature or one’s afternoon appointments doesn’t take judgment; it just requires rapid retrieval of information and accurate regurgitation of facts. However, giving fashion guidance, or any advice for that matter, is a "whole nother ballgame."

Advice is subjective; it’s primarily based on opinion. Granted, that opinion can take factual information into account (the best advice usually does), but the bottom line is always a judgment call. In terms of clothing, it’s relatively easy to answer objective questions, e.g., “Is this shirt blue or green?” It’s much more challenging to answer subjective ones like “Which shirt looks better on me, the blue one or the green one?” So, how do Amazon and Alexa do it?

The Echo Look website gives some clues: it says that the device’s Style Check feature “combines the best in machine learning with advice from fashion specialists.” A somewhat more technical explanation is that Style Check uses algorithms. Dataconomy.com provides a helpful explanation of how machines use algorithms to learn, or develop artificial intelligence:

“Machine learning is a type of artificial intelligence (AI) where computers can essentially learn concepts on their own without being programmed. These are computer programmes that alter their “thinking” (or output) once exposed to new data. In order for machine learning to take place, algorithms are needed. Algorithms are put into the computer and give it rules to follow when dissecting data.”

Granted, this description still doesn’t quite explain how technology translates factual information into opinion, but one can imagine that the application of enough decision rules and processing power can eventually allow for the formation of limited expert advice, aimed at a narrow range of easily observable behavior, such as the clothes on our backs.

So, that’s a little about what the Echo Look does and how Amazon does it, but another very compelling question remains: Why? The company offers the Echo Look, with all the features of the standard Echo, for just $20 more ($200 vs. $180). That’s not much more revenue for some significant functionality. Likewise, it seems that the Look could easily cannibalize the standard Echo, i.e., people may choose to buy the former instead of the latter. So what’s the rationale for Amazon’s strategy?

As the Wall Street Journal suggests, it’s all about the potential to sell more clothes. Sure, the earnings from the sale of thousands of new hardware devices will be nice, but Amazon is interested in continuing to crack a much bigger nut—the $2.4 trillion global clothing market.

Having purchased online footwear icon Zappos and introduced its own private label clothing, Amazon is already the leading fashion retailer. Last October, Cowen & Co. reported that Amazon was about to surpass the former leader, Macy’s, which has had declining sales. What’s more, there’s potential for Amazon to grow its market share of clothing much, much bigger. According to the Wall Street Journal, the Echo Look also recommends new clothes to its users, which they can buy from Amazon using just a voice command.

Currently, Amazon accounts for a whopping 43% of all online retail sales in the U.S.; however, its market share of clothing is ‘just’ 6.6%. Analysts at Cowen & Co. expect that share to increase to 8.2% in 2018 and to 16.2% in five years. The Echo Look will likely help drive that growth and perhaps more.

This sales data, past and projected, is informative, but it still doesn’t answer the moral question: Should Amazon or any other clothing retailer market a technology that tells people what to wear? Are the Echo Look and its cloud-based Style Check software ethical?

Understandably, privacy is a primary concern, especially given the nature of the device: taking rather personal pictures of people, in their homes, and storing them in the cloud. All of this information can be very enticing to hackers, some of whom would even have the potential to commandeer the device’s video camera. An article by Business Insider, however, allays some of these concerns, noting that Echo Look has an easily accessible off-switch, and even when powered on, the device does not listen until it hears a specific “wake” word.

Besides such concerns of creepiness, another problem some may have with the Echo Look is the idea that it’s replacing people: We are not interacting with family and friends if we’re asking a digital device what to wear. That’s a legitimate concern, though it’s nothing new: technology has long shaped, and will continue to shape, our interpersonal interaction. Tech can hurt or help such communication, depending on how it’s used. Actually, the Echo Look might alleviate some angst in relationships by handling uncomfortable questions like “How do I look in this?”

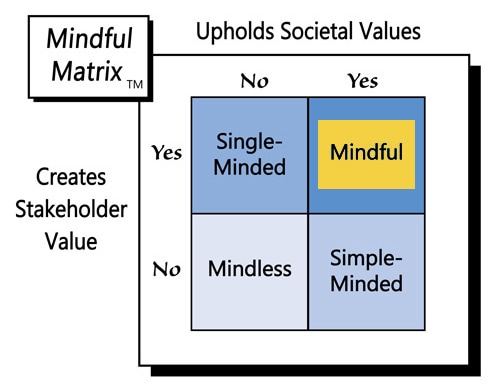

Amazon is already very big, and it’s poised to become even bigger with an increased focus on fashion. The firm’s growth has been due to many factors, including that it consistently offers consumers good value. Given an aggressive price point and a feature/benefit bundle that will only get better, the Echo Look is likely to continue that tradition, without posing any significant societal concerns. In short, Alexa can call her own style sense a model of “Mindful Marketing.”

